What is retrieval-augmented generation?

Retrieval-augmented generation (RAG) is the process of optimizing the output of a large language model so that it consults a reliable knowledge base, external to the data sources used to train it, before generating an answer.

This knowledge base is where you introduce your own data related to your area of expertise.

Large language models (LLMs) are trained on large volumes of data and use billions of parameters to generate original output for tasks such as answering questions, translating languages, and completing sentences. Retrieval-augmented generation extends the already powerful capabilities of LLMs to specific domains or to an organization's internal knowledge base, all without the need to retrain the model. It is a cost-effective way to improve LLM output so that it remains consistent, accurate, and useful in a wide range of contexts.

Why is retrieval-augmented generation important?

LLMs are a key artificial intelligence (AI) technology. They power intelligent chatbots and other natural language processing applications. The aim is to create bots that can answer users' questions in a variety of contexts by cross-referencing authoritative knowledge sources. Unfortunately, the nature of LLM technology makes LLM responses unpredictable. In addition, LLM training data is static, which imposes a cut-off date on the knowledge available to the model.

Known LLM challenges include:

- Presenting false information when it does not have the answer.

- Presenting obsolete or generic information when the user expects a specific, up-to-date response.

- Creating an answer from non-authoritative sources.

- Creating inaccurate answers due to terminology confusion, where different training sources use the same terminology to talk about different things.

You can think of the large language model as an over-enthusiastic new employee who refuses to keep up with current events but will always answer every question with absolute confidence. Unfortunately, such an attitude can have a negative impact on user trust, and it is not something you want your chatbots to emulate!

Retrieval-augmented generation is an approach that solves some of these challenges. It redirects the LLM to retrieve relevant information from predetermined, authoritative knowledge sources. Organizations gain greater control over the generated text, and users gain insight into how the LLM generates the answer.
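The retrieval step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: the `KNOWLEDGE_BASE` entries, the word-overlap scoring, and the `retrieve` and `build_prompt` helpers are all hypothetical, and the final call to an LLM is left out.

```python
import re

# Hypothetical knowledge base: (source name, passage) pairs supplied by the organization.
KNOWLEDGE_BASE = [
    ("hr-policy.pdf", "Employees receive 25 days of paid leave per year."),
    ("it-guide.pdf", "Password resets are handled through the self-service portal."),
    ("hr-policy.pdf", "Remote work requires written approval from a manager."),
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, top_k=2):
    """Rank passages by how many words they share with the question."""
    q_words = tokenize(question)
    scored = [
        (len(q_words & tokenize(passage)), source, passage)
        for source, passage in KNOWLEDGE_BASE
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Keep only passages that share at least one word with the question.
    return [(source, passage) for score, source, passage in scored[:top_k] if score > 0]


def build_prompt(question):
    """Place the retrieved passages, with their sources, ahead of the question."""
    context = "\n".join(f"[{source}] {passage}" for source, passage in retrieve(question))
    return (
        "Answer using only the context below and cite the source in brackets.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How many days of paid leave do employees receive?"))
```

Because each passage carries its source name into the prompt, the model can be asked to cite it, which is one way users can see where an answer came from.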

Points to remember

- RAG models create knowledge repositories based on company-owned data. The repositories can be continually updated to help generative AI deliver timely, contextual responses.

- RAG is a relatively new artificial intelligence technique. It can improve the quality of generative AI by enabling large language models (LLMs) to draw on additional data resources without retraining.

- Chatbots and other conversational systems that use natural language processing can greatly benefit from RAG and generative AI.

- Implementing RAG requires technologies such as vector databases, which allow new data to be encoded rapidly and then searched to feed the LLM.
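To make the vector database point concrete, here is a toy sketch of encoding data and searching it by similarity. Everything in it is an assumption made for illustration: `ToyVectorStore` is a hypothetical in-memory stand-in, and `embed` uses simple word counts where a real system would use a learned embedding model and an indexed database.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a sparse word-count vector (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (vector, original text)

    def add(self, text):
        """Encode new data once, at insertion time."""
        self._items.append((embed(text), text))

    def search(self, query, top_k=1):
        """Return the top_k stored texts most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query_vector, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add("The warranty covers parts and labour for two years.")
    store.add("Orders ship within three business days.")
    print(store.search("How long is the warranty?"))
```

The linear scan in `search` is fine for a handful of texts. Real vector databases rely on indexing to keep searches fast over large collections.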

How can I get my own retrieval-augmented generation?

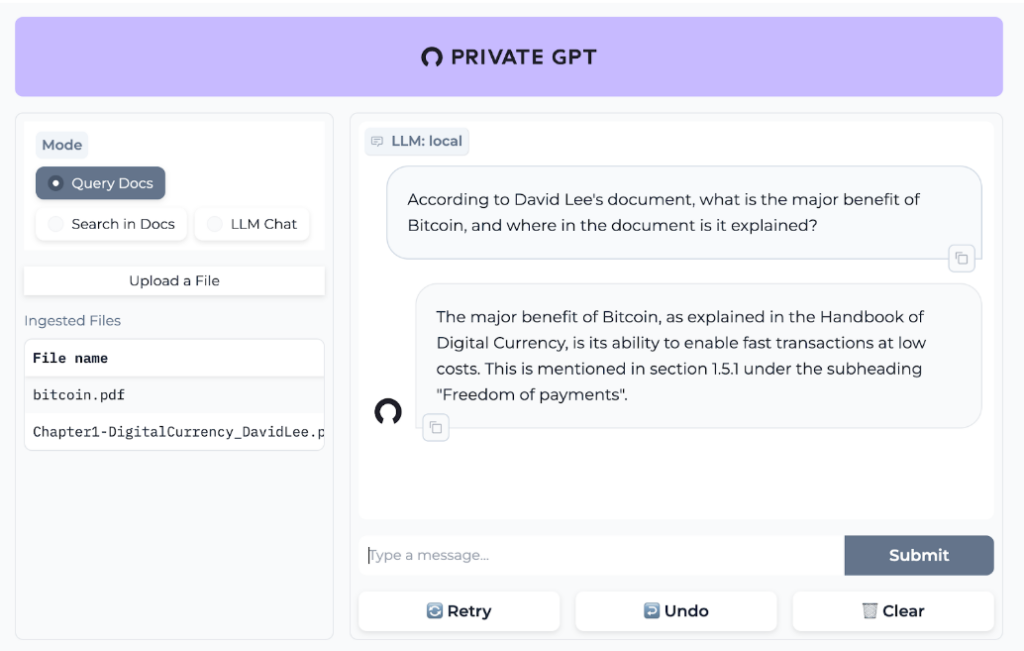

How can I have my own AI that answers questions in my area of expertise and guarantees the protection of my data?

You'd like to experiment with the possibility of having your own RAG.

I'm going to show you a simple way to achieve this in a Linux (Debian) environment, using privateGPT (https://github.com/imartinez/privateGPT).

This solution is not intended for production use. It's simply there to show you what a RAG can do for you.

You will need to execute the following instructions:

git clone https://github.com/imartinez/privateGPT

cd privateGPT/

sudo apt-get install libgl1-mesa-glx libegl1-mesa libxrandr2 libxss1 libxcursor1 libxcomposite1 libasound2 libxi6 libxtst6

curl -O https://repo.anaconda.com/archive/Anaconda3-2023.09-0-Linux-x86_64.sh

chmod +x ./Anaconda3-2023.09-0-Linux-x86_64.sh

./Anaconda3-2023.09-0-Linux-x86_64.sh

conda create -n privategpt python=3.11

conda activate privategpt

conda install poetry

conda update keyring

sudo apt-get install build-essential

poetry install --with ui,local

poetry run python scripts/setup

pip install llama-cpp-python

PGPT_PROFILES=local make run

You can now upload PDF files containing your information.
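When a document is uploaded, a RAG tool typically splits its text into smaller chunks before encoding them, so that only the relevant parts are passed to the LLM. The sketch below illustrates that idea only. It is not privateGPT's actual ingestion code, and the `chunk_text` helper and its `chunk_size` and `overlap` values are hypothetical.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters so that a sentence cut
    at a chunk boundary still appears whole in one of the two chunks.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    sample = "Retrieval-augmented generation grounds answers in your own documents. " * 5
    for number, chunk in enumerate(chunk_text(sample, chunk_size=120, overlap=30), start=1):
        print(number, repr(chunk))
```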
